DevOps

An article by Andreas Kuhn

– What is DevOps?

The goal of DevOps is to establish shared responsibility between two traditionally separate areas of IT: development (Dev) and operations (Ops).

The two areas pursue different goals, which is why the relationship between them is tense.

As competition intensifies, the business demands new features in its software at ever shorter intervals, which increases the pressure on development (time to market). Developers have to deliver software much faster than before, in considerably shorter release cycles, and then make it available to the business in the production environment.

This is where operations comes into play. Its most important task is to ensure that the software runs stably in production, even under varying loads. Once an environment is running, it should ideally not be touched; after all, operations is also held responsible if the software does not do what it should.

The motto here is often “never touch a running system”. If errors occur in production, they are of course primarily the fault of the developers, who once again did not test their new features properly.

What I have described here is also known as the “blame game” or “blame culture”: the constant conflict between development and operations. It seems to be a law of nature.

The goal of the DevOps movement is to resolve this supposed contradiction. Development and operations are to be merged into one effective unit that can deliver software faster and more reliably, and thereby create real added value for the business.

For this to succeed, a new culture and a common understanding must be developed within the company. John Willis, one of the veterans of the DevOps movement, names five points that must be in place for DevOps to work at all:

- Dev and Ops must grow together into DevOps. This is only possible on the basis of mutual trust, a free flow of information and a willingness to learn.

- “Automate everything”: everything that can be automated should be automated.

- Bottlenecks should be identified and eliminated in favor of creating added value and transparency; the principles of flow should be put into practice.

- Evaluation criteria must be defined and measured continuously, e.g. non-functional requirements such as the response times of a web application, test coverage, or static code analysis (e.g. SonarQube).

- In general, the greatest added value for the company is achieved when knowledge is shared. There must be a willingness to learn from each other.
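To make continuous measurement concrete, here is a minimal sketch that checks a non-functional requirement against recorded response times of a web application. The nearest-rank percentile method, the 300 ms target and the sample values are assumptions chosen for illustration, not figures from this article.

```python
import math

def percentile(samples_ms: list[float], p: float) -> float:
    """Return the p-th percentile (0-100) using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("no samples recorded")
    ordered = sorted(samples_ms)
    # Nearest rank: smallest value with at least p% of the samples at or below it.
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def meets_requirement(samples_ms: list[float], p: float, limit_ms: float) -> bool:
    """Check a requirement such as 'p95 response time <= 300 ms'."""
    return percentile(samples_ms, p) <= limit_ms

# Hypothetical response times (ms) collected from a web application.
samples = [120, 135, 150, 180, 210, 240, 260, 280, 310, 900]
print(percentile(samples, 95))              # 900
print(meets_requirement(samples, 95, 300))  # False
```

A single slow outlier is enough to fail a p95 target here, which is exactly the kind of signal that should be measured on every build rather than discovered in production.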

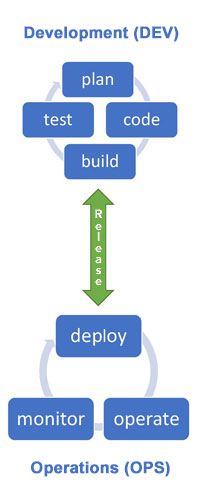

– Software cycle in DevOps

The following diagram is important:

Dev and Ops each have their own cycle. The two cycles are connected by the release of software packages. The release arrow points in both directions, so the two cycles continuously feed into each other. If something does not work in production, the information flows straight back to development. Since Dev and Ops now work in the same team, the problem is discussed promptly within the team and the team can respond as quickly as possible.

– Advantages of the DevOps approach

Speed

Processes are accelerated; externally, this means that customers get innovative solutions faster.

Reliability

Automating processes such as packaging, provisioning and testing creates transparency. It is also the cornerstone of repeatable and ultimately less error-prone execution.

Scalability

By using virtualization technologies, companies can scale faster and with consistent quality. When bottlenecks occur, it is no longer necessary to buy a new physical server; instead, a new virtual machine is set up. Today, the description of the virtual machine is written in a description language and kept under version control (“infrastructure as code”). The advantage of this approach is that any number of identical environments can be set up reliably in much less time.
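The core idea of infrastructure as code can be sketched in Python: the environment is described once as data that lives in version control, and every machine is derived from that single description. This is a toy model, not the syntax of a real tool such as Terraform or Ansible; `VmSpec`, its fields and `provision` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class VmSpec:
    """Hypothetical description of a virtual machine, kept in version control."""
    image: str
    cpus: int
    memory_gb: int
    hostname: str = ""

# The single source of truth that would be committed to version control.
BASE_SPEC = VmSpec(image="ubuntu-22.04", cpus=2, memory_gb=4)

def provision(spec: VmSpec, count: int, prefix: str) -> list[VmSpec]:
    """Derive `count` machine descriptions from one spec; only the hostname differs.

    A real tool would call a hypervisor or cloud API at this point; this sketch
    only returns the descriptions of the machines it would create.
    """
    return [replace(spec, hostname=f"{prefix}-{i}") for i in range(1, count + 1)]

for vm in provision(BASE_SPEC, 3, "web"):
    print(vm.hostname, vm.image, vm.cpus, vm.memory_gb)
```

Because the spec is frozen and every machine is copied from it, the environments cannot drift apart by hand; a change means editing and committing the description.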

Improved collaboration

DevOps teams share responsibility for the software both in development and operation.

Security

Automated tests increase the quality of the software. In addition to the well-known test pyramid (unit tests, integration tests, acceptance tests), a new movement is emerging: DevSecOps.

- The DevOps team also integrates IT security into its cycles, automated where possible and supported by additional, dedicated staff.

- Examples of DevSecOps:
  - Automated code vulnerability assessment
  - Automated security and penetration tests
  - Definition of quality gates
  - Audit logging of all automated processes (traceability)
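A quality gate combined with audit logging can be sketched in a few lines. The report fields, the 80 % coverage threshold and the rule set are assumptions for illustration; real pipelines typically take these values from tools such as SonarQube or a vulnerability scanner.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(name)s %(message)s")
audit_log = logging.getLogger("audit")

@dataclass
class SecurityReport:
    """Hypothetical results collected from automated tests and security scans."""
    test_coverage: float            # percent
    critical_vulnerabilities: int
    failed_security_tests: int

def quality_gate(build_id: str, report: SecurityReport,
                 min_coverage: float = 80.0) -> list[str]:
    """Return the violated rules; an empty list means the gate is passed."""
    violations = []
    if report.test_coverage < min_coverage:
        violations.append(f"coverage {report.test_coverage}% below {min_coverage}%")
    if report.critical_vulnerabilities > 0:
        violations.append(f"critical vulnerabilities: {report.critical_vulnerabilities}")
    if report.failed_security_tests > 0:
        violations.append(f"failed security tests: {report.failed_security_tests}")
    # Audit entry for traceability: which build, which decision, and why.
    audit_log.info("build=%s gate=%s violations=%s", build_id,
                   "passed" if not violations else "failed", violations)
    return violations

print(quality_gate("build-42", SecurityReport(85.0, 0, 0)))   # []
print(quality_gate("build-43", SecurityReport(72.5, 1, 0)))
```

Returning the list of violations rather than a bare boolean gives developers actionable feedback, and the audit line records every decision, whether passed or failed.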

– DevOps Best Practices

The following DevOps Best Practices help to realize the benefits listed above:

Continuous Integration

- When developers commit code, the version control system and a CI server such as Jenkins work together to run an automated process consisting of unit tests, code quality checks, packaging, etc.

- The code is thus tested continuously and the package is rebuilt continuously. Integration problems are detected early and reported back to the developers (feedback loop).
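The automated process described above (unit tests, code quality check, packaging, feedback to the developers) can be modeled as a fail-fast sequence of steps. This is not Jenkins configuration; it is a minimal Python sketch of what a CI server does for each commit, with hypothetical lambdas standing in for the real test runner, code analysis and build tools.

```python
from typing import Callable

# A pipeline step: a name and a function that returns True on success.
Step = tuple[str, Callable[[], bool]]

def run_pipeline(commit_id: str, steps: list[Step]) -> tuple[bool, list[str]]:
    """Run the CI steps in order and stop at the first failure.

    Returns (success, report); the report is what would be sent back
    to the developers as the feedback loop.
    """
    report = []
    for name, step in steps:
        ok = step()
        report.append(f"{commit_id}: {name} {'OK' if ok else 'FAILED'}")
        if not ok:
            return False, report   # fail fast: later steps (e.g. packaging) are skipped
    return True, report

# Hypothetical steps; a real CI server would invoke actual tools here.
steps = [
    ("unit tests", lambda: True),
    ("code quality check", lambda: False),
    ("packaging", lambda: True),
]
success, report = run_pipeline("a1b2c3d", steps)
print(success)   # False
for line in report:
    print(line)
```

Stopping at the first failure keeps feedback fast and prevents a package from being built out of code that has already failed a check.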

Scalability

A CI server such as Jenkins can also support this by automatically taking the software artifacts and installing them on different target platforms.
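Installing one versioned artifact on several target platforms is essentially a fan-out. The sketch below runs the installations in parallel with Python's `concurrent.futures`; `install` is a hypothetical placeholder for the platform-specific installation step, and the artifact and target names are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def install(artifact: str, target: str) -> str:
    """Hypothetical installer; a real CI job would copy the artifact to the
    target and run the platform-specific installation there."""
    return f"{artifact} installed on {target}"

def deploy_everywhere(artifact: str, targets: list[str]) -> dict[str, str]:
    """Install the same versioned artifact on all targets in parallel."""
    with ThreadPoolExecutor(max_workers=len(targets) or 1) as pool:
        results = pool.map(lambda target: install(artifact, target), targets)
        return dict(zip(targets, results))

print(deploy_everywhere("shop-1.4.2.war", ["linux-x64", "windows-x64"]))
```

The key point is that every target receives the identical artifact; only the installation step differs per platform.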

Microservices

A microservice is a service that ideally does only one thing, and does it with very high quality. An application based on a microservices architecture consists of many microservices that together provide the overall functionality of the application through orchestration. This contrasts with a monolithic application, which consists of one large software artifact. The advantage of microservices is that they can be deployed independently of one another, so new functionality can be delivered separately. Companies such as Amazon and Netflix have shown that it is possible to perform thousands of deployments per day and thus to constantly update and extend functionality. Even when an error occurs, the application does not lose its entire functionality; only the part represented by the affected microservice becomes unavailable.
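The failure isolation described above can be illustrated with a minimal orchestration sketch. The three services and their data are hypothetical and run in-process purely for illustration; in a real microservices architecture each would be a separately deployed service called over the network.

```python
from typing import Callable

# Hypothetical microservices, each doing exactly one thing.
def product_service(product_id: str) -> dict:
    return {"id": product_id, "name": "Example product"}

def review_service(product_id: str) -> dict:
    raise ConnectionError("review service unavailable")   # simulated outage

def price_service(product_id: str) -> dict:
    return {"price": 19.99, "currency": "EUR"}

SERVICES: dict[str, Callable[[str], dict]] = {
    "product": product_service,
    "reviews": review_service,
    "price": price_service,
}

def product_page(product_id: str) -> dict:
    """Orchestrate the services; a failing service only removes its own part."""
    page = {}
    for name, call in SERVICES.items():
        try:
            page[name] = call(product_id)
        except Exception as exc:   # isolate the failure instead of failing the whole page
            page[name] = {"error": str(exc)}
    return page

print(product_page("42"))
```

The page is still rendered with product data and price; only the reviews section degrades, which mirrors the partial unavailability described in the text.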

Logging / Monitoring

The automated processes should include logging. This ensures traceability. Only versioned artifacts may be processed. Additionally, a monitoring system should be established.

- APM (application performance monitoring)
  - Measurement of response times and database accesses, identification of load peaks
  - Tools: e.g. AppDynamics, Dynatrace

- End-user monitoring
  - Linking application logs with APM monitoring

- Synthetic monitoring
  - Health checks, liveness checks
  - Availability of the application
  - Support for web apps through Selenium and the CI server

- Log monitoring
  - Application logs, web server logs, OS logs
  - Identification and assignment to servers is important: server IDs, session IDs, artificial transaction IDs
  - Automated collection of logs from all servers when the application runs in a cluster
  - Tool: ELK stack (Elasticsearch, Logstash, Kibana)

- OS monitoring
  - Hardware metrics
  - Establish baselines
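Log monitoring across a cluster depends on being able to correlate log lines. A minimal sketch using Python's standard `logging` module writes each entry as one JSON line carrying a server ID, a session ID and an artificial transaction ID. The field names and `handle_request` are assumptions for illustration, and shipping the lines into Elasticsearch (e.g. via Logstash) is not shown.

```python
import json
import logging
import socket
import uuid

class JsonFormatter(logging.Formatter):
    """Emit one JSON object per line so a log shipper can index the fields."""
    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "server_id": getattr(record, "server_id", None),
            "session_id": getattr(record, "session_id", None),
            "transaction_id": getattr(record, "transaction_id", None),
            "message": record.getMessage(),
        })

logger = logging.getLogger("shop")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False   # avoid duplicate lines via the root logger

def handle_request(session_id: str) -> str:
    """Hypothetical request handler that logs with correlation IDs."""
    # An artificial transaction ID ties together all log lines of one request,
    # even when the cluster spreads them across several servers.
    ids = {"server_id": socket.gethostname(), "session_id": session_id,
           "transaction_id": str(uuid.uuid4())}
    logger.info("order received", extra=ids)
    logger.info("order stored", extra=ids)
    return ids["transaction_id"]

handle_request("sess-1234")
```

Searching for one `transaction_id` in Kibana would then return every line belonging to that request, regardless of which server wrote it.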

Collaboration and communication

Good communication across departments is the basis of a DevOps corporate culture.

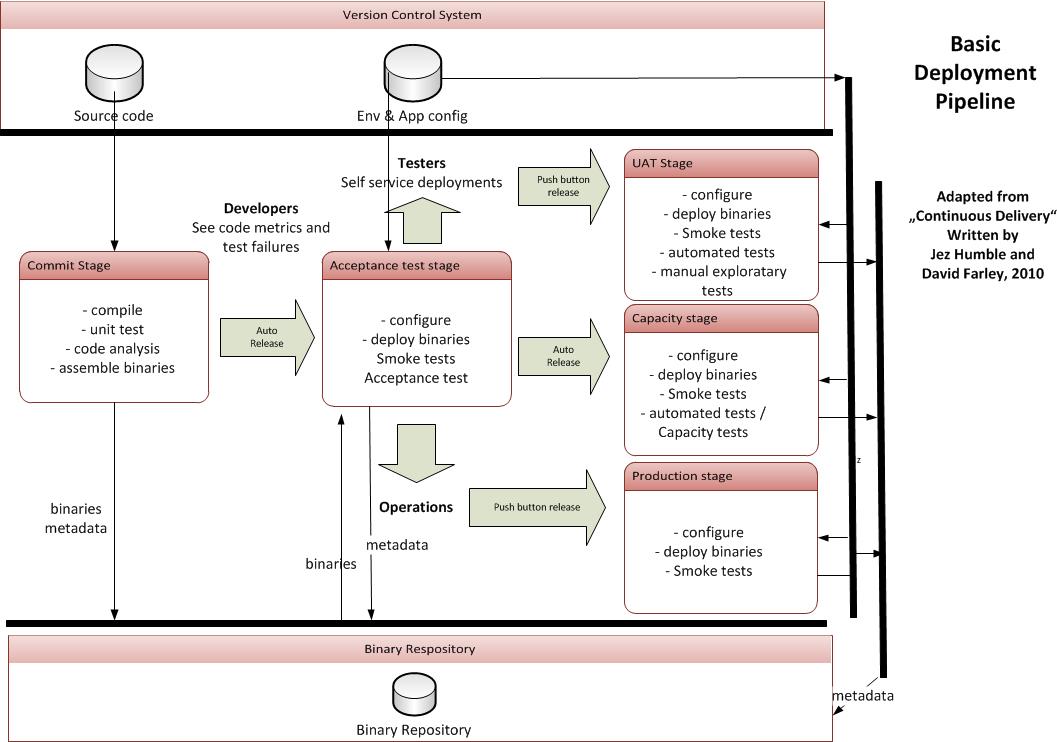

– Deployment Pipeline

The automated build and deployment processes (continuous integration, continuous delivery) are visualized in the deployment pipeline diagram. The pipeline shown is a proposal and can vary depending on company and project requirements. The commit stage, the acceptance stage and production should be understood as minimum requirements; projects can add further stages as needed.

The important thing here is the interaction:

The process begins with a developer checking source code into the version control system. The version control system is connected to a CI server (continuous integration server) such as Jenkins. For clarity, the CI server is not shown in the diagram.

The version control system notifies the CI server via a webhook that new code has been committed. The CI server then pulls the newly committed code from the version control system and starts the actions described in the commit stage.
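The webhook interaction can be sketched as a small handler that receives the notification and queues a commit-stage run. The payload fields `ref` and `after` are assumptions modeled on common push-event payloads, and the branch filter is an added illustration; check the webhook documentation of your version control system for the actual format.

```python
import json
from queue import Queue

build_queue: Queue = Queue()

def on_push_webhook(body: bytes, watched_branch: str = "refs/heads/main") -> bool:
    """Handle a push notification from the version control system.

    Assumed payload fields: `ref` (the pushed branch) and `after`
    (the revision after the push).
    """
    event = json.loads(body)
    if event.get("ref") != watched_branch:
        return False   # ignore pushes to other branches
    # Queue a commit-stage run; a worker would then pull exactly this
    # revision from version control and start the commit stage.
    build_queue.put({"stage": "commit", "revision": event["after"]})
    return True

payload = json.dumps({"ref": "refs/heads/main", "after": "a1b2c3d"}).encode()
print(on_push_webhook(payload))   # True
print(build_queue.get_nowait())   # {'stage': 'commit', 'revision': 'a1b2c3d'}
```

Queuing the revision rather than building inside the handler keeps the webhook response fast and ensures the build uses exactly the revision that was pushed.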

If all actions in the commit stage succeed, the CI server starts another automated process. It pulls the binaries generated in the commit stage and installs them in the acceptance test stage. In addition, the CI server retrieves the corresponding configuration from the version control system and installs it as well.

An error can occur in the commit stage if, for example, unit tests fail. Threshold values can be defined in the CI server that ultimately decide what happens next: either deployment to the next stage, or notification of the developers with no further automated actions.
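A threshold-based decision after the commit stage might look like the following sketch. Expressing the threshold as a maximum unit-test failure rate is an assumption for illustration; it is not the configuration syntax of Jenkins or any other CI server.

```python
from enum import Enum

class Action(Enum):
    PROMOTE = "deploy to acceptance test stage"
    NOTIFY = "notify developers, stop automated actions"

def next_action(tests_run: int, tests_failed: int,
                max_failure_rate: float = 0.0) -> Action:
    """Decide what follows the commit stage based on a failure-rate threshold.

    max_failure_rate = 0.0 means that any failed unit test stops the pipeline.
    """
    if tests_run == 0:
        return Action.NOTIFY   # no tests executed: treat as a failure
    failure_rate = tests_failed / tests_run
    return Action.PROMOTE if failure_rate <= max_failure_rate else Action.NOTIFY

print(next_action(200, 0))                         # Action.PROMOTE
print(next_action(200, 3))                         # Action.NOTIFY
print(next_action(200, 3, max_failure_rate=0.02))  # Action.PROMOTE
```

A strict default of zero tolerated failures is the safest choice; a nonzero threshold is a deliberate trade-off a team would have to agree on.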

From the Acceptance Test Stage, the process then proceeds automatically to the Capacity Stage.

Testers can select a version, install it on the UAT stage and then test it.
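The interplay of automatic and manual stages can be summarized in a toy model: the CI server promotes a version automatically through the commit, acceptance and capacity stages until one fails, while installation on UAT is a manual choice by a tester. The class and method names are hypothetical, and the rule that only versions which passed the acceptance stage may go to UAT is an assumption, not something the pipeline description above prescribes.

```python
from dataclasses import dataclass, field
from typing import Optional

# Stages the CI server runs automatically, in order (names from the text above).
AUTOMATIC_STAGES = ["commit", "acceptance", "capacity"]

@dataclass
class DeploymentPipeline:
    passed: dict[str, set[str]] = field(default_factory=dict)  # stage -> versions that passed
    uat_version: Optional[str] = None                          # version installed on UAT

    def run(self, version: str, results: dict[str, bool]) -> list[str]:
        """Promote `version` through the automatic stages until one fails."""
        reached = []
        for stage in AUTOMATIC_STAGES:
            if not results.get(stage, False):
                break   # stop: later stages are not run
            self.passed.setdefault(stage, set()).add(version)
            reached.append(stage)
        return reached

    def install_on_uat(self, version: str) -> None:
        """Manual step: a tester selects a version and installs it on UAT.

        Assumption: only versions that passed the acceptance stage are eligible.
        """
        if version not in self.passed.get("acceptance", set()):
            raise ValueError(f"{version} has not passed the acceptance stage")
        self.uat_version = version

pipeline = DeploymentPipeline()
print(pipeline.run("1.4.0", {"commit": True, "acceptance": True, "capacity": True}))
print(pipeline.run("1.4.1", {"commit": True, "acceptance": False}))
pipeline.install_on_uat("1.4.0")
print(pipeline.uat_version)   # 1.4.0
```

Keeping the UAT step manual lets testers pick any eligible version, while the automatic chain guarantees that only builds which passed the earlier stages reach them.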

Andreas Kuhn

Senior IT Consultant